Introducing Odd.io

A new audio-based social network with a seamless, endless feed.

My role in Odd.io:

User experience research

Concept and ideation

Visual design

Team Members:

Kyle Coy Barron, Denny Check, Jackie Hu, Rituparna Roy, Nat Schade, Michael Silvestre

Tools:

Figma, Photoshop, iMovie, Zoom (for conducting remote user tests)

Methodology:

A/B testing, Storyboarding, Interviews, Surveys, Speed dating, Experience prototyping

What is Odd.io?

Opportunity Space

Enter Odd.io, an audio-based social network that aims to decrease screen fatigue and increase vocal engagement among friends and family online. Audio is an under-explored medium for social media, and it has the potential to ease some of the overload of visual stimuli. With the rise of fully wireless earbuds, smart speakers, and new audio formats like podcasts, audio entertainment is becoming a constant background companion for more people than ever before.

While Gen Z and Millennials are increasing their consumption of audio content, there is currently no popular audio-based social media platform. Odd.io offers people a unique social media platform built on conversational user interfaces (CUI).

Problem Space

Odd.io fulfills unmet user needs in the current social media ecosystem. Screen fatigue is an increasing problem as more people are spending more time on screens. The volume of content posted on social media is constantly increasing, but users have a desire to reduce the portion of their day they spend looking at a screen.

Researching Users’ Relationships with Audio Media

Storyboards and speed dating

“Research goal: explore how users prefer to interact with audio posts”

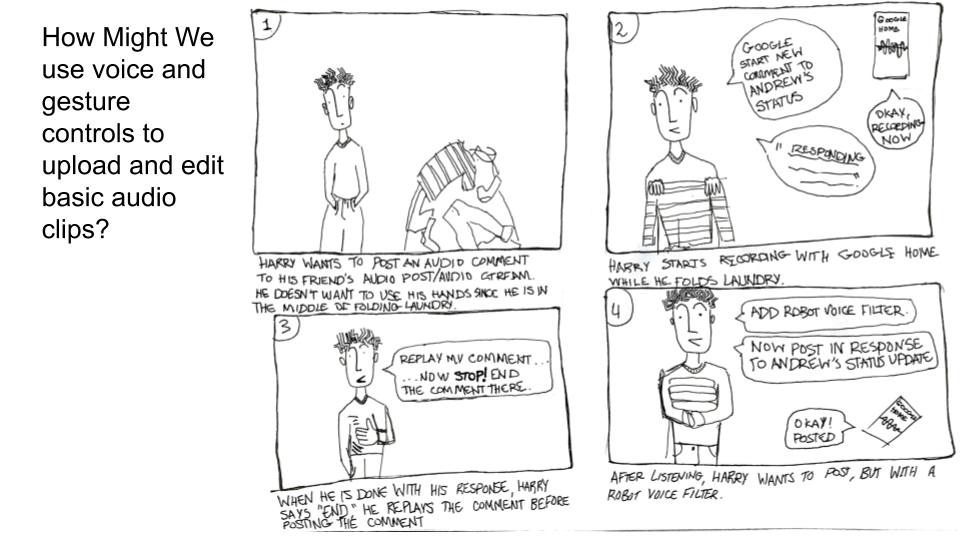

The team conducted two rounds of storyboarding to test and verify our initial hypotheses on how people wanted to consume audio-based social media content. The interactions that we looked into included commenting, audio editing (such as voice filters), voice controls, gesture and visual controls, and more.

Each storyboard was built around a “how might we?” statement. We speed dated the storyboards: the facilitator showed each participant the storyboards one at a time. As participants viewed each storyboard, they were asked to share their initial reactions to the story and how they would feel about performing the actions in it themselves.

For the first round, we showed six participants thirteen different storyboards.

Round 1 Results

Positive Reactions: There were extremely positive responses to the idea of humorous, voice-distorting audio filters. People were also interested in listening to audio threads as a way to interact with their friends on a social media platform. This interest was predicated on the idea that they would be listening to their friends, not to transcribed audio read by a CUI (such as Alexa or Google Home). People seemed receptive to, and comfortable with, the idea that some sort of visual would be involved in the platform. The final idea that participants received positively was the ability to share clips of audio or podcast content with friends.

Negative Reactions: We also found three areas to which people had strong negative reactions. People did not like the idea of using clapping or gestures to control an audio-based social media site. To many, these interactions presented far too large an opportunity for error. There were also concerns about decreased functionality without a visual interface. Without a visual, people worried that they would make mistakes, and participants expressed a strong fear that they would accidentally upload something to the social media platform.

For the second round, we showed four participants five different storyboards.

Round 2 Results

Positive Reactions: We received positive responses from all of the participants to the idea of funny audio filters, an idea that also received positive feedback in the first round. In the second round, we presented ideas that were received well the first time, as well as new ideas. One new idea that was received very positively was integrated visual aids that convert the audio to text. Participants felt that this would increase accessibility and usability in situations where listening to audio is not feasible.

Negative Reactions: The idea that received the largest amount of negative feedback in this round was about environmental sound needs, as the participants largely felt that need was already covered by existing options.

Survey

Because audio social interaction is not yet widely available on the market, we used a survey to reach a larger audience and validate some of our broad assumptions before conducting more detail-oriented qualitative research.

“Research Goal: understand preferences for audio consumption and areas of interest for audio social media”

When you encounter social media content with sound (Instagram video, etc.), do you turn on your volume and listen to it?

Do you usually multitask when consuming audio content?

Would you prefer an audio social media that you can listen to hands-free or with supplementary visual content?

Thirty-seven people answered the sixteen-question survey, which mixed quantitative questions (how many people choose X) with qualitative questions (open-ended responses).

Key takeaways:

97.3% of the participants indicated that they usually multitask when playing audio, suggesting that people listen to audio as a secondary or tertiary task that complements a primary task.

People avoid opening audio and video content on social media in public when they are not wearing headphones or earphones, because they don’t want to disturb others.

Audio is most likely to be consumed while people are commuting, cooking, or working.

People are interested in listening to stand-up comedy, talk shows, and event commentary on an audio social media platform.

The survey showed that people don’t have a strong preference between using audio social media hands-free or with a GUI. Short-answer responses fueled the assumption that these preferences are informed by infrequent usage and a general distrust of conversational agents.

Pretotype

“Research goal: understand how people respond to bite-sized audio content”

Based on the results of our previous methods, the team developed a “pretotype” to gauge how users would actually feel about specific elements of our idea. We wanted to understand how people would react to threads of their friends’ audio. Participants had expressed interest in the idea, but with no existing example to draw on, they had no experience on which to base their opinions, so we wanted to see how an actual thread would affect them.

We told each participant they were about to listen to an audio-only social media app, in which their friends were talking about their day.

After listening to the string of audio clips, each participant was asked questions about their listening experience, such as: “What did you think of these clips? Would you listen to something like this? How would you like to interact with the audio?”

Usability results: The ability to use this app hands-free meant a great deal to participants. The idea of being able to do something like the laundry or washing the dishes while listening to social media was very powerful for some of our participants.

Suggested Concepts and Features: Participants wanted to communicate or interact with their friends through the app, rather than endlessly listening to individual clips. Some type of back-and-forth was necessary to make Odd.io worthwhile.

Additionally, they wanted the following abilities:

Skimming audio

Adjusting playback speed

Voice filters

New content discovery

Tag- and comment-based sorting system

“Standard” social media features such as following, commenting, and liking

Parallel prototype testing

“Research Goal: explore the level of comfort that people have using voice commands and the minimum visuals needed to optimize the interaction.”

We designed the prototyping phase to analyze CUI interactions on social media platforms and to understand how users listen to posts, comment on posts, create posts, and adjust their feed. Parallel prototyping tested two potential formats for audio-based social media platforms. One prototype was a pure conversational user interface (CUI), and the other mixed conversational and graphical user interfaces (GUI).

Both prototypes tested commenting and categorization features, and used the “Wizard of Oz” technique to simulate working CUIs. We conducted eight sessions: four participants were asked to complete tasks using the CUI, and the other four were asked to complete the same tasks using the mixed CUI and GUI.

CUI Prototype: This prototype focused on audio-only interactions, in which participants navigated our app using purely voice commands. We provided a list of potential voice commands for participants to reference while engaging with the prototype.

Participants experienced increased frustration using audio-only controls, and wanted a screen for visual interactions, feedback, and feedforward. Most users were not familiar with using voice commands, and were uncomfortable with the idea of using them in public spaces. One of the major outcomes of the CUI prototype testing was the addition of a consistent onboarding tutorial, which gives users the chance to get used to the voice commands at their own pace.

Mixed CUI/GUI Prototype: This prototype explored the idea of audio with supplementary visual stimulus. Participants were still asked to use voice commands, but had the option to navigate the app through more common interactions involving tapping, scrolling, or swiping visual elements on a screen.

Ultimately, users were much more comfortable with the mix of interfaces when compared with the CUI prototype.

Prototyping Odd.io

Overview

As illustrated in the research sections above, we started with a simple audio-only prototype, a demo version of what one’s feed might sound like. We used feedback on this prototype to better understand the overall viability of the concept, as well as the situations and settings in which users see themselves using Odd.io. Users highlighted active situations (driving, cooking, working, cleaning, etc.) as key times to use Odd.io. This pointed us in the direction of a hands-free or audio-based interaction system that would let users perform some familiar social media tasks such as liking, saving, and responding to posts.

Modeling the interaction

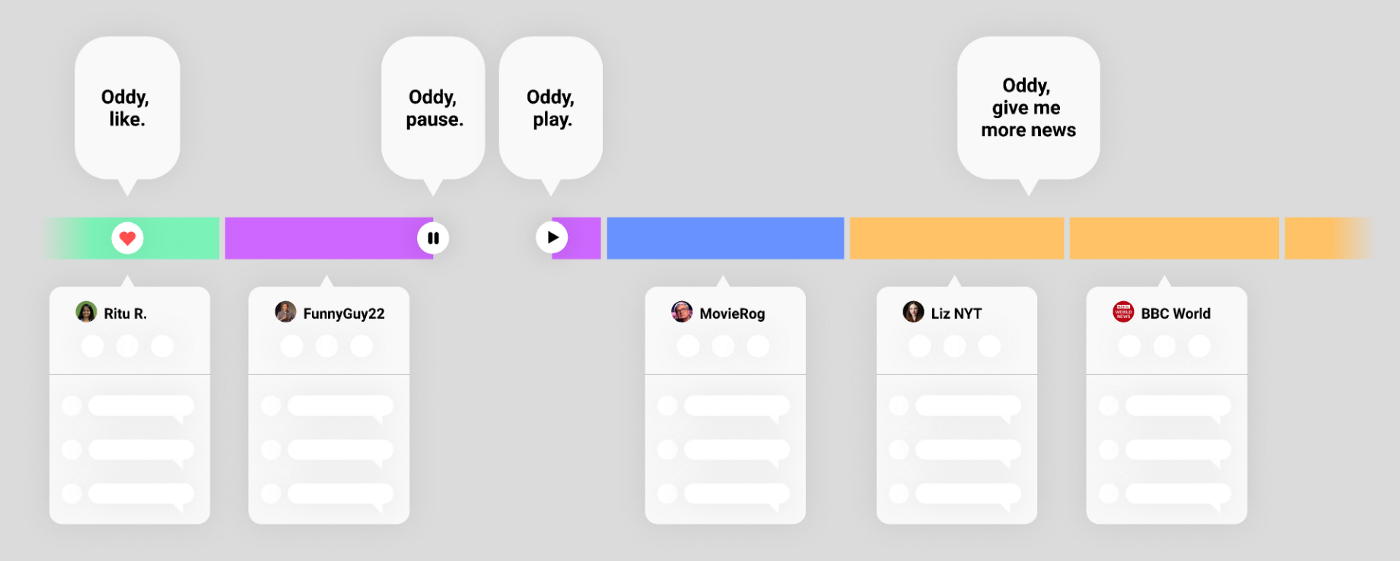

A conceptual model of the Odd.io feed, with CUI-based interaction points at top, and a GUI view at bottom.

In our next iterations, we tested specific interactions in audio and visual format to understand which features were most desired, which interactions required visual interfaces and feedback, and which could be accomplished via a conversational user interface. Ultimately, we found that users were comfortable accomplishing basic tasks (passive listening, liking, following, and feed navigation) in a pure audio CUI, while a visual interface could be a home for less frequent or more nuanced interactions like editing one’s social graph, adjusting content preferences or creating richer posts.

As a result, Odd.io became a mix of visual and conversational interfaces that includes a hands-free, CUI-only mode for listening, browsing, and commenting. Additional features added to the final prototype based on previous testing include:

Adjusting GUI and VUI elements to provide better feedback and feedforward

Providing audio-to-visual transcription

Commenting in real-time audio on audio clips, allowing for a targeted experience

Providing an array of freely available audio reactions for an enhanced experience, similar in purpose to emojis and GIFs

Creating audio snippet sharing options, so users can send and receive relevant pieces of clips within their social network

Odd.io’s latest iteration

How does Odd.io work?

“Hey Oddie! Play my feed”

On Odd.io users upload audio clips of up to 60 seconds. These bite-sized clips are then strung together into a seamless “feed” of audio clips for other users to listen to. Like the visual feeds of applications such as Instagram, Twitter and TikTok, the Odd.io stream is functionally endless and can incorporate content from the user’s network as well as algorithmically-derived content based on users’ wants and behaviors.
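The feed behavior described above can be illustrated with a minimal Python sketch. It is not Odd.io's actual implementation. The `Clip` type, the `accept_upload` and `build_feed` functions, the "network" and "recommended" labels, and the two-to-one mixing ratio are all hypothetical, chosen only to show a 60-second upload limit and a functionally endless stream that mixes clips from the user's network with algorithmically chosen clips.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Iterator, List

MAX_CLIP_SECONDS = 60  # upload limit stated in the text above


@dataclass(frozen=True)
class Clip:
    author: str
    duration_seconds: float
    source: str  # hypothetical label: "network" or "recommended"


def accept_upload(clip: Clip) -> bool:
    """Return True if the clip is non-empty and within the 60-second limit."""
    return 0 < clip.duration_seconds <= MAX_CLIP_SECONDS


def build_feed(
    network: List[Clip],
    recommended: List[Clip],
    network_per_recommended: int = 2,  # assumed ratio, for illustration only
) -> Iterator[Clip]:
    """Yield clips endlessly: a few network clips, then one recommended clip."""
    if network_per_recommended < 1:
        raise ValueError("network_per_recommended must be at least 1")

    # Drop anything that would not have passed the upload check.
    net = [c for c in network if accept_upload(c)]
    rec = [c for c in recommended if accept_upload(c)]
    if not net and not rec:
        return  # nothing to play, so the feed is empty

    # cycle() repeats each pool forever, which makes the feed endless.
    net_cycle = cycle(net) if net else None
    rec_cycle = cycle(rec) if rec else None
    while True:
        if net_cycle is not None:
            for _ in range(network_per_recommended):
                yield next(net_cycle)
        if rec_cycle is not None:
            yield next(rec_cycle)
```

Because `build_feed` is a generator, a player could pull one clip at a time with `next(...)` and never reach the end of the stream. A real feed would rank fresh content rather than repeat a fixed pool.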

Our latest iteration positions the user on a central “listen” screen when they open the app. From here, the most common use case would be to hit “play” and exit the app to let Odd.io run in the background, conversing with the app via a CUI assistant from then on. A slider allows for enhanced control during exploration. We also adjusted GUI and VUI elements to provide better feedback and feedforward for using the platform.

In other cases, users (especially power users) may swipe to the posting screen, where they can compose audio clips and label them with specific categories and tags. Another view lets users adjust their algorithm to feed them clips of a certain type (news, comedy, personal, reviews, etc.).

We believe Odd.io represents a novel concept for audio social media with real-world viability after further refinement.